In recent years, Large Language Models (LLMs) have significantly advanced artificial intelligence, particularly natural language processing (NLP) and natural language understanding. Trained on vast datasets, these models enable machines to produce human-like text. However, their deployment raises serious concerns about toxicity, bias, and exploitation by malicious actors. Organizations that use LLMs must address these challenges to deliver ethical and effective AI solutions.

The capabilities of LLMs are accompanied by inherent risks, notably the inadvertent perpetuation of toxic and biased content. Toxicity encompasses the generation of harmful or abusive language, while bias refers to the reinforcement of stereotypes and prejudices. Such issues can result in discriminatory outputs that adversely affect individuals and communities. Addressing these challenges is essential for fostering trust and reliability in AI-driven applications.

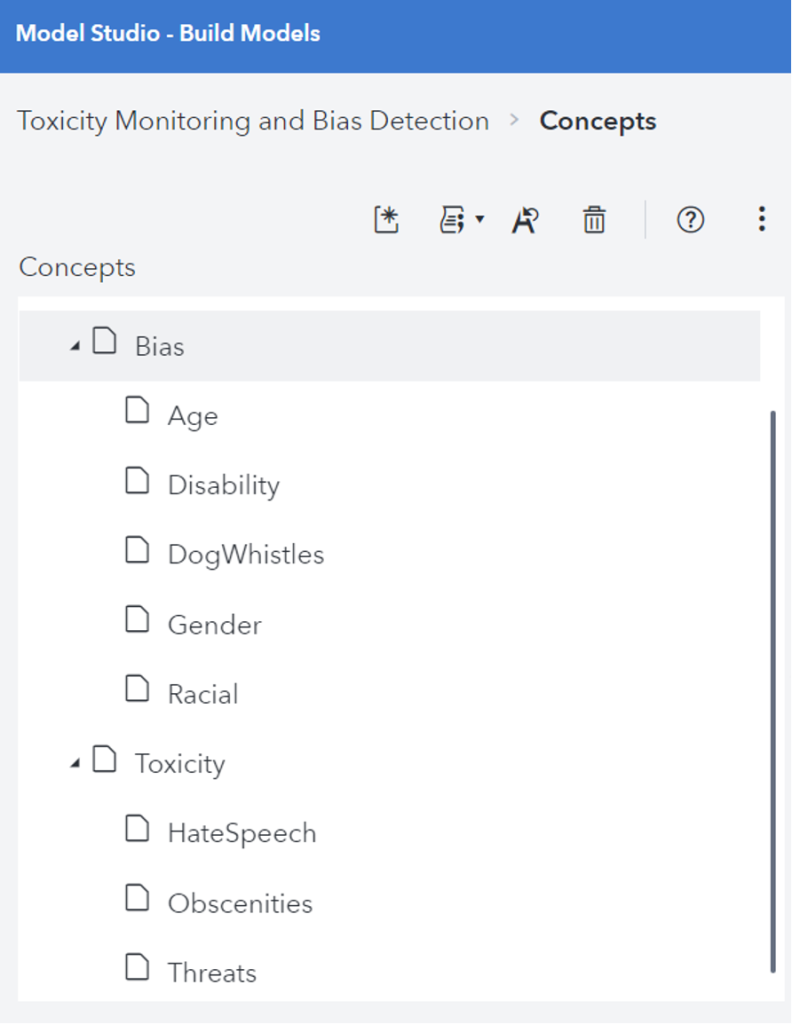

The primary goal outlined in the original post is to manage toxicity and bias within LLM outputs to ensure trustworthy and equitable interactions. Achieving this involves a multifaceted approach that includes data transparency, effective content moderation tools, and continuous human oversight.

Addressing toxicity and bias in LLMs presents several advantages, chief among them greater trust in, and reliability of, AI-driven applications.

Despite these advantages, challenges persist, particularly regarding the dynamic nature of language and the emergence of new harmful trends over time. Continuous adaptation and enhancement of moderation systems are crucial to overcoming these obstacles.
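A moderation system that adapts over time can be pictured as a gate that scores model output, blocks clear violations, and sends borderline cases to a human reviewer. The sketch below is illustrative only and is not taken from the original post: the class `ModerationGate`, its thresholds, and the weighted term list are all hypothetical, and the term lookup stands in for whatever trained toxicity classifier a real deployment would use.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class ModerationGate:
    """Toy moderation gate (hypothetical; not a production design)."""

    # Assumed term -> severity weights in [0, 1]; a real system would
    # replace this lookup with a trained classifier.
    flagged_terms: dict[str, float] = field(default_factory=dict)
    block_threshold: float = 0.8   # at or above: reject the output
    review_threshold: float = 0.4  # at or above: route to a human

    def score(self, text: str) -> float:
        """Return the highest severity weight of any word in the text."""
        words = [w.strip(".,!?;:\"'").lower() for w in text.split()]
        return max((self.flagged_terms.get(w, 0.0) for w in words), default=0.0)

    def decide(self, text: str) -> str:
        """Map a score to one of: 'block', 'human_review', 'allow'."""
        severity = self.score(text)
        if severity >= self.block_threshold:
            return "block"
        if severity >= self.review_threshold:
            return "human_review"
        return "allow"

    def update_terms(self, new_terms: dict[str, float]) -> None:
        """Add or re-weight terms as new harmful language emerges."""
        self.flagged_terms.update(new_terms)


gate = ModerationGate({"idiot": 0.9, "stupid": 0.5})
print(gate.decide("You are an idiot!"))        # block
print(gate.decide("That was a stupid idea."))  # human_review
print(gate.decide("Thanks for your help."))    # allow
```

The middle band between the two thresholds is where human oversight comes in, and `update_terms` is the hook for the continuous adaptation described above: reviewers' decisions can feed new terms or weights back into the gate.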

As AI technology continues to evolve, the implications for managing toxicity and bias in LLMs are profound.

Ultimately, the future of LLMs hinges on the commitment of organizations to develop and implement responsible AI practices that prioritize ethical considerations while leveraging the transformative capabilities of these models.

In summary, the integration of LLMs into various applications necessitates a vigilant approach to managing toxicity, bias, and the potential for manipulation by bad actors. By prioritizing data transparency, employing effective content moderation tools, and ensuring continuous human oversight, organizations can cultivate a safer and more equitable AI landscape. The ongoing evolution of AI technologies underscores the need for responsible practices that benefit society while minimizing harm.

