Introduction Food allergies pose a significant global health challenge, affecting approximately 220 million individuals worldwide. In the United States, about 10% of the population is impacted by at least one food allergy, which adversely affects their physical health and mental well-being. The urgency to address this issue has spurred advancements in biomedical research, notably through the application of artificial intelligence (AI) in understanding and managing food allergies. This convergence of technology and biomedical science presents a promising avenue for enhancing diagnostics, treatments, and preventive strategies. Main Goal and Its Achievement The primary objective of leveraging AI in food allergy research is to advance our understanding of allergenicity and improve therapeutic approaches. Achieving this goal involves developing community-driven projects that integrate AI with biological data to foster collaboration among researchers, clinicians, and patients. By utilizing high-quality datasets, AI can enhance the predictive accuracy of models aimed at identifying allergens and evaluating therapeutic efficacy. Advantages of AI in Food Allergy Research Enhanced Predictive Accuracy: AI models trained on extensive datasets, such as the Awesome Food Allergy Datasets, can accurately predict allergenic proteins by analyzing amino-acid sequences and identifying biochemical patterns. Accelerated Drug Discovery: AI-driven approaches facilitate virtual screening of compounds, significantly reducing the time required for traditional laboratory experiments. This acceleration is made possible through deep learning models that predict binding affinities and drug-target interactions. Improved Diagnostics: Machine learning algorithms can synthesize multiple diagnostic modalities (e.g., skin-prick tests, serum IgE levels) to provide a more accurate estimation of food allergy probabilities, thus improving patient safety by minimizing unnecessary oral food challenges. 
Real-Time Allergen Monitoring: Advances in natural language processing (NLP) enable the analysis of ingredient labels and recall data, allowing consumers to receive alerts about undeclared allergen risks in near real-time. Comprehensive Data Utilization: The integration of various datasets—ranging from molecular structures to patient outcomes—enhances the understanding of food allergies and informs the development of personalized treatments. Caveats and Limitations Despite these advantages, several caveats must be considered. The success of AI applications in food allergy research is contingent upon the availability of high-quality, interoperable data. Current challenges include data fragmentation and gatekeeping, which hinder collaborations and slow research progress. Additionally, while AI can enhance diagnostic and therapeutic strategies, it cannot replace the necessity of clinical expertise in interpreting results and managing patient care. Future Implications The future of AI in food allergy research holds substantial promise. As AI technologies continue to evolve, they are expected to enable the development of early diagnostic tools, improve the design of immunotherapies, and facilitate the engineering of hypoallergenic food options. These advancements will not only enhance the safety and quality of life for individuals with food allergies but may also lead to innovative approaches in allergen management and prevention. Conclusion The integration of AI into food allergy research represents a transformative opportunity to address a pressing public health issue. By fostering collaborative, community-driven initiatives and leveraging robust datasets, researchers can unlock new insights into allergenicity, ultimately leading to enhanced diagnostic tools and therapeutic options. As this field progresses, the implications for individuals affected by food allergies will be profound, paving the way for safer and more effective management strategies. 
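The label-analysis idea under "Real-Time Allergen Monitoring" can be illustrated with a very small sketch. It is not the NLP pipeline the summary refers to: it uses plain keyword matching, and the term list `ALLERGEN_TERMS` and both function names are hypothetical, chosen only for illustration. A production system would need a curated vocabulary and far more robust language handling.

```python
import re

# Hypothetical allergen vocabulary for illustration only; a real system
# would rely on a curated, regulator-aligned term list.
ALLERGEN_TERMS = {
    "milk": ["milk", "whey", "casein", "lactose"],
    "egg": ["egg", "albumin"],
    "peanut": ["peanut", "groundnut"],
    "wheat": ["wheat", "semolina"],
    "soy": ["soy", "soya", "edamame"],
}


def find_allergens(ingredient_text):
    """Return the sorted allergen categories whose terms appear in the text."""
    text = ingredient_text.lower()
    found = set()
    for allergen, terms in ALLERGEN_TERMS.items():
        for term in terms:
            # Whole-word match with an optional plural "s", so "eggplant"
            # does not count as "egg".
            if re.search(r"\b" + re.escape(term) + r"s?\b", text):
                found.add(allergen)
                break
    return sorted(found)


def undeclared_allergens(ingredient_text, declared):
    """Return allergens found in the ingredients but missing from the declaration."""
    declared_lower = {d.lower() for d in declared}
    return [a for a in find_allergens(ingredient_text) if a not in declared_lower]
```

For example, `undeclared_allergens("Sugar, whey powder, roasted peanuts", ["Milk"])` flags `peanut`. Keyword matching of this kind produces false positives (for instance, "coconut milk"), which is one reason the summary points to NLP models rather than simple string rules.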
Disclaimer The content on this site is generated using AI technology that analyzes publicly available blog posts to extract and present key takeaways. We do not own, endorse, or claim intellectual property rights to the original blog content. Full credit is given to original authors and sources where applicable. Our summaries are intended solely for informational and educational purposes, offering AI-generated insights in a condensed format. They are not meant to substitute or replicate the full context of the original material. If you are a content owner and wish to request changes or removal, please contact us directly.

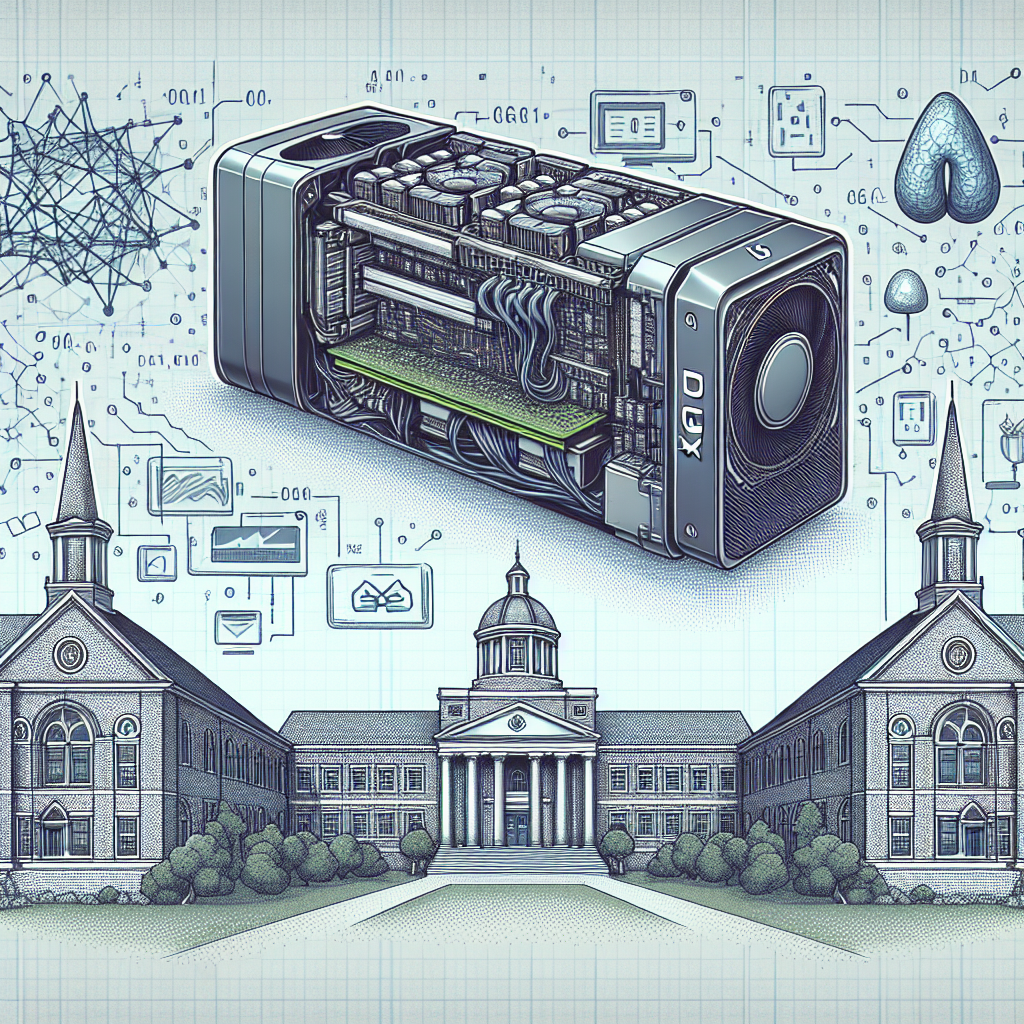

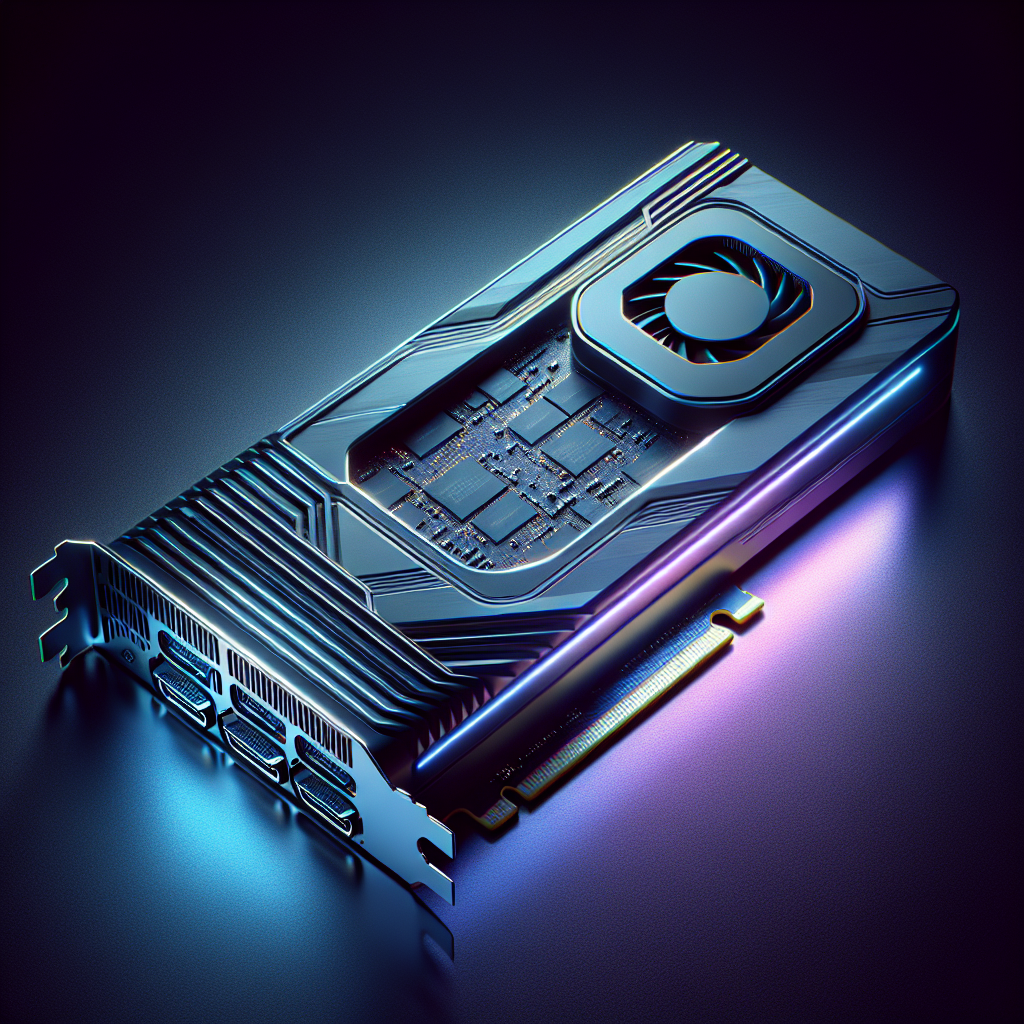

Context of Generative AI Advancements at UC San Diego The Hao AI Lab at the University of California, San Diego (UCSD) is at the forefront of generative artificial intelligence (AI) research, recently enhancing its capabilities with the acquisition of the NVIDIA DGX B200 system. This advanced hardware aims to bolster their work in large language model (LLM) inference, a critical area in the evolution of generative AI technologies. The lab’s innovative research contributes significantly to foundational concepts that underpin many modern AI frameworks, including NVIDIA Dynamo and DistServe, which optimize the performance of generative models. Main Goals and Their Achievement The primary goal of the Hao AI Lab’s recent advancements is to enhance the efficiency and responsiveness of generative AI systems, particularly in the context of LLMs. By leveraging the high-performance capabilities of the DGX B200, researchers aim to accelerate the prototyping and experimentation processes associated with AI model development. This is achieved through the system’s superior processing power, enabling researchers to execute complex simulations and generate outputs more rapidly than previous hardware allowed. Advantages of the NVIDIA DGX B200 for Generative AI Research Increased Processing Power: The DGX B200 boasts one of the most advanced architectures available, which significantly improves the speed of AI model training and inference processes. Enhanced Research Capabilities: The lab is employing the DGX B200 for cutting-edge projects such as FastVideo, which creates video content from textual prompts, and Lmgame, a benchmarking suite for evaluating LLMs through interactive gaming. Real-Time Responsiveness: The system facilitates research into low-latency LLM serving, allowing for applications that require immediate interaction, such as real-time user interfaces. 
Optimized Resource Management: Utilizing advanced metrics like ‘goodput,’ which balances throughput and latency, the system allows researchers to maximize system efficiency while maintaining user satisfaction. Interdepartmental Collaboration: The DGX B200 serves as a catalyst for cross-disciplinary research initiatives, enhancing collaboration between departments such as healthcare and biology at UCSD. Future Implications of AI Developments The advancements in generative AI facilitated by the NVIDIA DGX B200 signal a transformative era for AI research and applications. As the capabilities of LLMs expand, their integration into diverse fields such as medicine, education, and entertainment is expected to deepen, enhancing user experience and accessibility. Moreover, the ongoing research into optimizing LLM performance through innovative methodologies will likely lead to breakthroughs in how AI systems interact with users, creating more intuitive and responsive applications. However, the field must also navigate challenges such as ethical considerations, data governance, and the potential for bias in AI outputs. As researchers continue to explore the limits of generative AI, maintaining a focus on responsible AI development will be essential to harness its full potential while mitigating risks.
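The "goodput" metric mentioned in the UC San Diego summary can be made concrete with a short sketch. The summary only says that goodput balances throughput and latency, so the definition used here is an assumption: the rate of requests that meet both a time-to-first-token target and a time-per-output-token target. The class `RequestStats`, the function names, and the target values are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class RequestStats:
    ttft_s: float  # time to first token, in seconds
    tpot_s: float  # average time per output token, in seconds


def throughput(requests, window_s):
    """All completed requests per second, regardless of latency."""
    if window_s <= 0:
        raise ValueError("window_s must be positive")
    return len(requests) / window_s


def goodput(requests, window_s, ttft_slo_s, tpot_slo_s):
    """Requests per second that met BOTH latency targets (assumed definition)."""
    if window_s <= 0:
        raise ValueError("window_s must be positive")
    within_slo = sum(
        1
        for r in requests
        if r.ttft_s <= ttft_slo_s and r.tpot_s <= tpot_slo_s
    )
    return within_slo / window_s
```

Under this reading, a server can show high throughput yet low goodput if many responses arrive too slowly to be useful, which is why the two numbers are worth tracking separately.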

Contextual Overview In the rapidly evolving landscape of technology, the paradigm of hiring has undergone significant transformation, particularly influenced by the advent of artificial intelligence (AI). Previously, organizations prioritized the recruitment of specialists—individuals with deep expertise in narrowly defined domains such as backend engineering, data science, or system architecture. This strategy was viable in an era characterized by gradual technological evolution, where specialists could leverage their skills to deliver consistent results. However, with AI becoming mainstream, the pace of technological advancement has accelerated dramatically, necessitating a shift towards hiring generalists who possess a more versatile skill set. Redefining Expertise in the Age of AI The integration of AI into various sectors has not only democratized access to complex technical tasks but has also elevated the standards for what constitutes true expertise. Reports from organizations such as McKinsey suggest that by 2030, a substantial portion of U.S. work hours could be automated, prompting a need for workforce adaptability. In this context, the ability to learn and adapt swiftly has superseded the traditional value placed on extensive experience. Companies are now witnessing engineers transition fluidly between roles, reflecting the need for a workforce adept in multiple disciplines as technological challenges become increasingly interdisciplinary. Main Goal and Its Achievement The primary objective articulated in the original discourse is to advocate for the hiring of generalists over specialists in the current AI landscape. This can be achieved by fostering an organizational culture that values adaptability, cross-functional collaboration, and continuous learning. 
By actively seeking candidates who demonstrate a capacity for quick learning and problem-solving across various domains, organizations can cultivate a workforce that is resilient and responsive to the fast-paced changes characteristic of the AI era. Advantages of Hiring Generalists Increased Flexibility: Generalists can navigate multiple roles, enabling organizations to allocate resources more efficiently and respond to emerging challenges without the constraints of rigid job descriptions. Broader Perspective: With a diverse skill set, generalists can approach problems from various angles, fostering innovation and creativity in solutions. Enhanced Collaboration: Generalists often possess strong communication skills, allowing them to bridge gaps between departments and facilitate teamwork across functional areas. Proactive Problem Solving: The ability to act without waiting for explicit direction empowers generalists to take initiative, driving projects forward in dynamic environments. Preparedness for Future Challenges: As technology continues to evolve, a workforce composed of adaptable generalists is better equipped to embrace change and meet new demands. Limitations and Caveats While the benefits of employing generalists are substantial, organizations must also acknowledge potential limitations. Generalists may lack the depth of knowledge required for highly specialized tasks, which can lead to challenges in fields where intricate expertise is critical. Additionally, the transition to a generalist-focused hiring strategy requires a cultural shift within organizations, which may encounter resistance from existing staff accustomed to traditional specialist roles. Implications for the Future As AI technologies continue to develop, their impact on workforce dynamics will likely intensify. The demand for generalists is expected to grow, prompting organizations to reevaluate their hiring practices and training methodologies. 
Future implications may include the necessity for ongoing professional development programs designed to cultivate adaptability and interdisciplinary skills among employees. Organizations that embrace this shift will likely find themselves better positioned to navigate the complexities of an AI-driven future, where the ability to pivot and learn swiftly will be paramount.

Introduction In the field of healthcare robotics, the integration of simulation technologies is redefining how developers approach the design, testing, and deployment of robotic systems. Traditional methods have often been hindered by lengthy prototyping cycles and challenges in translating simulated outcomes to real-world applications. Recent advancements, particularly with NVIDIA’s Isaac for Healthcare platform, are addressing these challenges, facilitating a streamlined workflow from simulation to deployment. This blog post aims to elucidate these developments and their implications for Generative AI models and applications within the healthcare sector, particularly focusing on the benefits for GenAI scientists. Main Goal of the Original Post The primary objective of the original blog post is to guide developers in constructing a healthcare robot using the NVIDIA Isaac for Healthcare framework, emphasizing the streamlined transition from simulation to real-world deployment. Achieving this goal involves leveraging the SO-ARM starter workflow, which enables developers to collect data, train models, and deploy them effectively in real-world settings. Advantages of the SO-ARM Starter Workflow Reduction in Prototyping Time: The integration of GPU-accelerated simulation allows developers to reduce the prototyping phase from months to days. This acceleration is critical in the fast-paced healthcare environment where time-to-market can significantly impact patient care. Enhanced Model Accuracy: By utilizing a mix of real-world and synthetic data for training, the accuracy of the robotic models is significantly improved. Over 93% of training data can be sourced from simulations, effectively bridging the data gap typically faced in robotics.
Safer Innovation: The ability to test and validate robotic workflows in safe, controlled virtual environments minimizes the risks associated with deploying untested systems in actual operating rooms. End-to-End Pipeline: The SO-ARM workflow provides a comprehensive pipeline encompassing data collection, model training, and policy deployment, facilitating a seamless transition from development to real-world application. Versatile Training Techniques: The blended approach of using approximately 70 simulation episodes alongside 10-20 real-world episodes allows for policies that generalize effectively beyond training scenarios, enhancing the robot’s adaptability in diverse environments. Limitations and Caveats While the advancements in the SO-ARM workflow present numerous benefits, several limitations warrant consideration. The reliance on simulation data, although substantial, may not fully capture all real-world complexities, which could affect the robot’s performance in unpredictable scenarios. Additionally, the hardware requirements for deploying these systems can be significant, necessitating investments in advanced computational resources. Future Implications of AI Developments in Healthcare Robotics The trajectory of AI development in healthcare robotics indicates a profound impact on the industry. As generative models evolve, the capacity for these systems to learn from increasingly complex datasets will enhance their operational effectiveness. Future iterations of platforms like NVIDIA’s Isaac for Healthcare are likely to incorporate more sophisticated AI-driven capabilities, allowing for more autonomous decision-making in surgical settings. Additionally, as the technology matures, we can anticipate broader adoption across various healthcare settings, leading to improved patient outcomes and operational efficiencies. 
Conclusion The advancements facilitated by NVIDIA’s Isaac for Healthcare and the SO-ARM starter workflow are pivotal in transforming the landscape of healthcare robotics. By enabling a streamlined process from simulation to deployment, these technologies not only enhance the speed and accuracy of robotic systems but also pave the way for future innovations in the field. For GenAI scientists, this represents an exciting frontier, combining the power of generative AI with practical applications that can significantly improve healthcare delivery.

Contextual Framework of Cloud Gaming and Generative AI The landscape of digital entertainment has evolved dramatically, particularly with the advent of cloud gaming platforms such as GeForce NOW. As evidenced by recent releases like Fallout: New Vegas and Hogwarts Legacy, these platforms not only enhance accessibility but also provide gamers with an expansive library of titles without the burdensome need for high-end hardware. This shift is paralleled in the Generative AI Models & Applications sector, where AI technologies are increasingly integrated into gaming to facilitate richer experiences and personalization. The intersection of cloud gaming and AI technology presents a unique opportunity for Generative AI scientists to contribute to and shape this burgeoning field. Main Goals and Achievements The primary goal presented in the original blog post revolves around maximizing the gaming experience through the cloud, thereby ensuring that players can engage with their favorite titles seamlessly and without the constraints typically associated with traditional gaming setups. Achieving this involves leveraging advanced AI algorithms to optimize graphics rendering, reduce latency, and enhance overall gameplay performance. Furthermore, cloud gaming platforms are designed to provide instant access to a variety of games, allowing users to engage with different gaming universes effortlessly. Advantages of Cloud Gaming and AI Integration Accessibility: Cloud gaming platforms allow users to play high-fidelity games on low-end devices, broadening the gaming demographic. Immediate Engagement: Players can quickly switch between games, which is crucial in maintaining user interest and engagement. Enhanced Visuals and Performance: Utilizing AI-driven technologies like NVIDIA’s DLSS 4, gamers can experience superior graphics and smoother gameplay. 
Seamless Updates and Maintenance: Cloud platforms manage updates automatically, ensuring that users always have the latest version without additional downloads. Cost Efficiency: By eliminating the need for expensive hardware, players can invest in their gaming experience without substantial upfront costs. However, it is essential to consider potential limitations such as reliance on internet connectivity, which may affect performance in areas with inconsistent bandwidth. Future Implications of AI Developments in Gaming The integration of AI in gaming is poised to evolve significantly in the coming years. As advancements in Generative AI continue, we can expect more personalized gaming experiences that adapt to individual player preferences and skill levels. Future developments may also include enhanced AI-driven NPC behaviors, leading to more immersive and dynamic in-game environments. Furthermore, the ongoing refinement of cloud infrastructure is likely to reduce latency issues, making real-time interactions more fluid and responsive. This will not only improve player satisfaction but may also attract a larger audience to the gaming world.

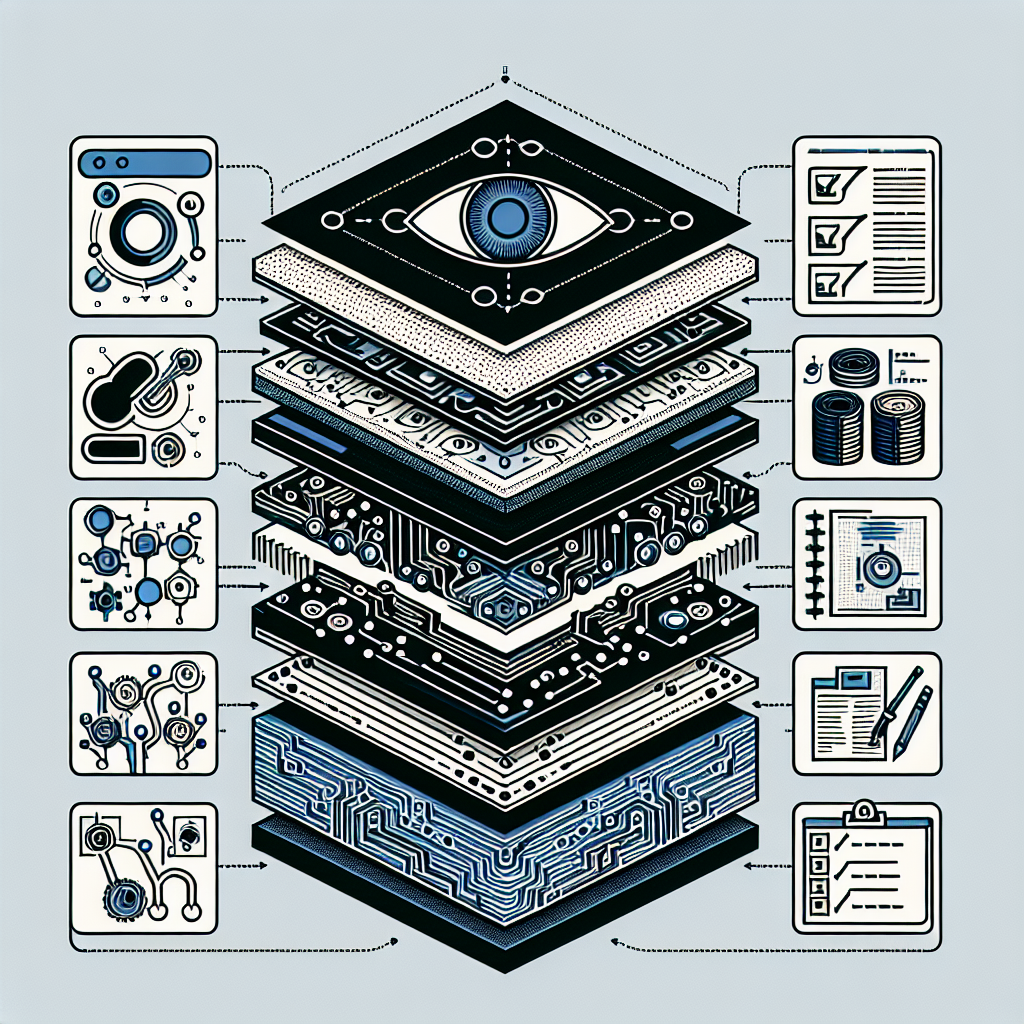

Introduction The recent launch of FunctionGemma by Google marks a pivotal development within the realm of Generative AI models and applications. As the industry continues to explore advancements in artificial intelligence, particularly within mobile environments, FunctionGemma emerges as a specialized solution aimed at enhancing reliability and efficiency in application development. This blog post will contextualize the significance of FunctionGemma, elucidate the main goals of its deployment, outline its advantages, discuss potential limitations, and reflect on future implications for AI technologies and their impact on Generative AI scientists. Contextualizing FunctionGemma FunctionGemma is a compact AI model comprising 270 million parameters, specifically designed to address one of the most pressing challenges in modern application development: achieving reliability at the edge. Unlike traditional general-purpose chatbots, FunctionGemma focuses on a singular purpose—translating natural language commands into executable code for applications and devices, all while operating independently of cloud connectivity. This strategic pivot by Google emphasizes a growing trend towards the utilization of Small Language Models (SLMs) that can run locally on diverse devices such as smartphones, browsers, and IoT systems. For AI engineers and enterprise builders, FunctionGemma represents a novel architectural paradigm—a privacy-centric “router” capable of executing intricate logic on-device with minimal latency. Main Goal and Achievement Mechanism The primary objective of FunctionGemma is to bridge the “execution gap” prevalent in generative AI applications. Standard large language models, while effective in conversation, often falter when tasked with initiating specific software actions on resource-constrained devices. 
FunctionGemma seeks to remedy this by offering a fine-tuned model that significantly increases accuracy in function calling tasks, thus enhancing the device’s capability to interpret and execute user commands reliably. Achieving this goal involves training the model on a dedicated dataset, optimizing it specifically for mobile applications, and ensuring its seamless integration with existing development frameworks. Advantages of FunctionGemma 1. **Enhanced Accuracy**: Initial evaluations indicated that generic small models achieved a mere 58% accuracy in function calling tasks. Upon fine-tuning, FunctionGemma demonstrated an impressive accuracy rate of 85%, indicating its ability to perform comparably to larger models while operating efficiently on local devices. 2. **Local Execution**: By processing commands on-device, FunctionGemma minimizes latency, ensuring that actions are executed instantaneously without the delays associated with server communication. This capability is particularly advantageous in applications requiring real-time responses. 3. **Privacy and Data Security**: The local execution model means that sensitive personal data, such as contacts and calendar entries, remains on the user’s device, significantly reducing privacy risks associated with cloud-based processing. 4. **Cost Efficiency**: Developers utilizing FunctionGemma circumvent the costs associated with per-token API fees that are common in larger cloud models. This makes FunctionGemma an economically viable option for simple interactions and reduces operational expenses for enterprises. 5. **Versatility and Compatibility**: FunctionGemma is designed to integrate seamlessly with various development ecosystems, including Hugging Face Transformers and NVIDIA NeMo libraries, allowing for a broad range of applications and use cases. Limitations and Caveats While FunctionGemma presents numerous advantages, it is essential to consider certain limitations. 
The model’s performance is contingent on its specific training for function calling tasks, meaning that its effectiveness may diminish if applied to broader, less-defined use cases. Moreover, although Google markets FunctionGemma as an “open model,” it operates under custom licensing terms that impose restrictions on certain uses, which may limit its applicability in some contexts. Future Implications The introduction of FunctionGemma signals a shift towards more localized AI solutions, potentially reshaping the landscape of application development. As AI technologies continue to evolve, the emphasis on small, efficient models capable of operating independently of cloud infrastructures could lead to a wider adoption of edge computing paradigms. For Generative AI scientists, the implications are profound; the focus on privacy-first approaches and the need for reliable, deterministic outputs will likely drive innovations in AI model design and deployment. As enterprises seek to mitigate compliance risks and enhance user privacy, the demand for models like FunctionGemma that can operate effectively on-device is expected to rise, fundamentally transforming how AI applications are developed and deployed in various sectors. Conclusion FunctionGemma represents a significant advancement in the field of Generative AI, offering a specialized solution that enhances reliability, privacy, and cost-effectiveness in application development. As the landscape of AI technology continues to evolve, its implications for Generative AI scientists and the broader industry will be profound, paving the way for a new era of localized, efficient AI applications.
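The on-device "router" role described in the FunctionGemma summary can be sketched as a small dispatcher that takes a model's structured function call and runs the matching local function. This is an illustration of the general pattern, not FunctionGemma's actual output format or API: the JSON shape (`name` plus `arguments`), the registry, and the two example tools are all assumptions made for the sketch.

```python
import json

# Hypothetical registry of functions the app exposes to the model.
REGISTRY = {}


def tool(fn):
    """Register a local function under its own name."""
    REGISTRY[fn.__name__] = fn
    return fn


@tool
def set_alarm(hour, minute):
    return f"Alarm set for {hour:02d}:{minute:02d}"


@tool
def toggle_flashlight(on):
    return "Flashlight on" if on else "Flashlight off"


def dispatch(model_output):
    """Parse an assumed JSON function call and run it locally.

    Expected (hypothetical) shape:
    {"name": "set_alarm", "arguments": {"hour": 7, "minute": 30}}
    """
    try:
        call = json.loads(model_output)
    except json.JSONDecodeError:
        return "error: output is not valid JSON"
    if not isinstance(call, dict):
        return "error: output is not a JSON object"
    name = call.get("name")
    args = call.get("arguments", {})
    if not isinstance(name, str) or name not in REGISTRY:
        return f"error: unknown function {name!r}"
    if not isinstance(args, dict):
        return "error: arguments must be a JSON object"
    try:
        return REGISTRY[name](**args)
    except (TypeError, ValueError) as exc:
        # Wrong argument names, counts, or value types end up here.
        return f"error: bad arguments for {name}: {exc}"
```

The dispatcher rejects anything it cannot validate instead of guessing. That matters in a setting where, per the summary, function-calling accuracy is 85% after fine-tuning rather than 100%.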

Context The contemporary landscape of artificial intelligence (AI) and generative AI models is experiencing significant transformations, especially concerning the global compute infrastructure. Historically dominated by U.S. technologies, the AI chip market is witnessing a paradigm shift as China accelerates its domestic chip development in response to U.S. export controls. This evolution is pivotal for GenAI scientists, who are increasingly relying on domestic chip capabilities for innovative AI model training and deployment. Main Goal and Its Achievement The primary objective of the evolving compute landscape is to foster a self-sufficient and competitive AI ecosystem, particularly within China. This goal can be achieved through the development of robust domestic chip technology that can effectively support the training and inference of generative AI models. U.S. export restrictions on advanced chips have paradoxically catalyzed innovation within China, prompting local companies to enhance their capabilities and reduce reliance on foreign technology. Structured List of Advantages Increased Innovation: The urgency imposed by U.S. restrictions has led to rapid advancements in AI chip technology in China. Firms like Huawei and Cambricon are producing chips that support high-performance AI models, which can be seen in the deployment of models trained on these domestic chips. Cost-Effectiveness: Domestic chips are not only sufficient but also increasingly optimized for various AI applications. The development of cost-efficient models, such as DeepSeek’s offerings, demonstrates lower operational costs, making sophisticated AI solutions accessible to a broader range of organizations. Collaborative Ecosystem: The synergy between chip manufacturers and AI developers has fostered an open-source culture that encourages knowledge sharing. This collaborative environment enhances model efficiency and reduces the barriers to entry for new AI applications. 
Resilience Against Supply Chain Disruptions: By building a domestic chip ecosystem, China mitigates risks associated with reliance on foreign technology, thereby ensuring a more stable supply of necessary components for AI development. Global Competitiveness: As Chinese chips gain prominence, more models are becoming optimized for local hardware, creating a competitive edge in the global AI landscape. This shift is reshaping expectations for AI training and deployment methodologies. Caveats and Limitations While the advancements in the domestic chip industry are promising, there are caveats. The rapid pace of innovation could lead to disparities in quality and performance compared to established players like NVIDIA. Furthermore, the transition to a self-sufficient ecosystem will require sustained investment and regulatory support to address potential market fluctuations. Future Implications The implications of these developments for generative AI and its scientists are profound. As domestic chips become more capable, we can anticipate a shift in the AI research ecosystem towards models that leverage local architectures. This could lead to novel AI methodologies and applications tailored to regional needs, ultimately creating a diverse landscape of AI solutions. Additionally, the growing emphasis on open-source collaboration may result in more democratized access to advanced AI tools, fostering innovation across various sectors. Conclusion The shifting global compute landscape presents both opportunities and challenges for GenAI scientists. As domestic capabilities grow and the reliance on foreign technologies diminishes, it is crucial for researchers and developers to adapt to these changes. By leveraging the advancements in domestic chips and fostering collaborative environments, the future of generative AI can be shaped to meet a broader range of applications and societal needs. 

Introduction
In the rapidly evolving landscape of artificial intelligence (AI), psychological safety has emerged as a critical factor in organizational success. As Rafee Tarafdar, executive vice president and chief technology officer at Infosys, has highlighted, the pace of technological change calls for a culture that encourages experimentation and accepts the possibility of failure. This blog post examines the relationship between psychological safety and AI implementation, drawing on a survey of 500 business leaders conducted by MIT Technology Review Insights.

The Central Goal of Psychological Safety in AI Implementation
The primary goal is to create a workplace culture that prioritizes psychological safety, improving the odds that AI initiatives succeed. This calls for a comprehensive, systems-level approach that builds psychological safety into an organization’s core operations rather than relegating it solely to human resources (HR). Such integration is vital for dismantling the cultural barriers that often hinder innovation and experimentation within an enterprise.

Advantages of Fostering Psychological Safety in AI Initiatives
1. **Enhanced Success Rates of AI Projects**: Organizations with a culture conducive to experimentation report significantly higher success rates in their AI projects. According to the survey, 83% of executives believe that a focus on psychological safety directly correlates with improved outcomes in AI initiatives.
2. **Open Communication and Feedback**: A notable 73% of respondents said they feel safe providing honest feedback and sharing their opinions freely. This openness can lead to more innovative solutions and the collaborative atmosphere that effective AI research and development requires.
3. **Reduction of Psychological Barriers**: The survey revealed that psychological barriers, rather than technological challenges, are often the most significant obstacles to AI adoption. Approximately 22% of leaders admitted to hesitating to lead AI projects because they feared negative repercussions from potential failures. A culture of psychological safety can ease that anxiety and encourage proactive leadership in AI endeavors.
4. **Continuous Improvement of Organizational Culture**: Fewer than half of the surveyed leaders rated their organizations’ psychological safety as “very high,” while 48% reported a “moderate” level. This leaves clear room for improvement: as organizations work to strengthen psychological safety, they also reinforce the cultural foundations that sustainable AI adoption depends on.

Future Implications of Psychological Safety in AI Development
Cultivating psychological safety matters more as AI technologies advance. As the demand for innovative solutions grows, organizations that successfully implement psychological safety will likely gain a competitive advantage in the market. Future developments in AI will require a workforce that is not only technically skilled but also empowered to take risks and engage in creative problem-solving without fear of retribution. Organizations that embrace this principle will be better positioned to adapt to technological changes, harness diverse perspectives, and drive meaningful innovations in AI research and applications.

Conclusion
In summary, establishing psychological safety is vital for organizations navigating the complexities of AI implementation. By fostering an environment where employees feel secure in expressing their ideas and taking calculated risks, enterprises can strengthen their capabilities in AI research and innovation. As AI continues to evolve, the interplay between psychological safety and organizational culture will shape both technological advancement and enterprise success.

Contextualizing the NVIDIA RTX PRO 5000 72GB Blackwell GPU for Generative AI
The NVIDIA RTX PRO 5000 72GB Blackwell GPU marks a significant milestone in desktop computing for AI professionals, including developers, engineers, and designers. With robust capabilities for agentic and generative AI applications, this GPU is now widely accessible and provides an essential resource for memory-intensive workflows. It arrives as demand for high-performance computing resources is surging, particularly among AI developers who need scalable solutions that can accommodate diverse project requirements.

Main Goal and Achievement Path
The primary objective of the NVIDIA RTX PRO 5000 72GB is to increase the memory capacity and computational power available to professionals engaged in AI development. The GPU does this by supporting larger models and more complex workflows, both essential for advancing generative AI applications. With a choice between the 72GB and 48GB variants, developers can tailor their systems to specific project needs and budget constraints.

Advantages of the NVIDIA RTX PRO 5000 72GB
Increased Memory Capacity: The 72GB of GDDR7 memory is a 50% increase over the 48GB variant, which makes it easier to handle larger datasets and more complex AI models while maintaining high performance in generative AI workflows.
Enhanced AI Performance: The GPU delivers 2,142 TOPS of AI performance, addressing the computational demands of cutting-edge applications, including large language models and multimodal AI systems.
Significant Performance Improvements: Benchmarks show that the RTX PRO 5000 72GB achieves 3.5 times the image generation performance and double the text generation performance of previous generations.
Real-time Rendering Capabilities: In applications such as computer-aided engineering and creative design, the GPU renders up to 4.7 times faster, enabling quicker iterations and higher productivity.
Cost Efficiency and Data Privacy: By letting developers run models locally, the RTX PRO 5000 allows teams to maintain data privacy and reduce dependence on expansive data-center infrastructures, thus lowering operational costs.

Future Implications of AI Developments
The continuous evolution of AI technologies, particularly generative and agentic AI, suggests that the demand for advanced computational resources will only increase. The NVIDIA RTX PRO 5000 72GB is positioned to supply the hardware needed to support increasingly sophisticated AI applications. As industries integrate AI across domains ranging from design to robotics, the ability to use high-performance GPUs like the RTX PRO 5000 will be pivotal in driving innovation and efficiency. Moreover, as AI models grow in complexity, memory capacity and processing speed will become even more critical to the work of developers and scientists.
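
The memory comparison between the 72GB and 48GB variants can be made concrete with a small back-of-the-envelope calculation. The sketch below is only an illustration under stated assumptions: it counts model weights alone, assumes 2, 1, and 0.5 bytes per parameter for FP16, FP8, and 4-bit formats, treats 1 GB as 10^9 bytes, and reserves an assumed 20% of memory for activations and caches. The function names are hypothetical and do not come from any NVIDIA tool.

```python
# Back-of-the-envelope sizing sketch. All names are illustrative and are not
# part of any NVIDIA tool or API.

# Assumed storage cost per model parameter, in bytes.
BYTES_PER_PARAM = {"fp16": 2.0, "fp8": 1.0, "int4": 0.5}


def capacity_increase_pct(new_gb: float, old_gb: float) -> float:
    """Return the percentage increase in capacity from old_gb to new_gb."""
    return (new_gb - old_gb) / old_gb * 100.0


def max_params_billions(
    memory_gb: float, precision: str, overhead_fraction: float = 0.2
) -> float:
    """Estimate the largest model (in billions of parameters) whose weights fit.

    overhead_fraction is an assumed share of memory reserved for activations,
    caches, and runtime buffers; real overhead depends on the workload.
    """
    if not 0.0 <= overhead_fraction < 1.0:
        raise ValueError("overhead_fraction must be in [0, 1)")
    usable_bytes = memory_gb * 1e9 * (1.0 - overhead_fraction)  # 1 GB = 1e9 bytes
    return usable_bytes / BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    print(f"72GB vs 48GB: +{capacity_increase_pct(72, 48):.0f}% capacity")
    for precision in BYTES_PER_PARAM:
        small = max_params_billions(48, precision)
        large = max_params_billions(72, precision)
        print(f"{precision}: ~{small:.1f}B params (48GB) vs ~{large:.1f}B params (72GB)")
```

Because the estimate scales linearly with memory, the 72GB variant fits roughly 1.5 times the parameter count of the 48GB variant at any given precision. Actual limits depend on context length, batch size, and the inference runtime, so these numbers are a planning aid rather than a guarantee.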

Contextual Background on Enterprise AI Development
Enterprise AI solutions are shaped by how quickly the underlying AI landscape changes. Palona AI’s leadership describes the experience as “building on a foundation of shifting sand,” a metaphor that captures the difficulties startups face in the generative AI sector. Palona AI, a Palo Alto-based startup led by veterans from Google and Meta, has recently narrowed its focus to the restaurant and hospitality industries with two platforms: Palona Vision and Palona Workflow. This pivot marks a shift from the company’s initial, broader approach, which sought to apply emotional intelligence across diverse direct-to-consumer enterprises. By committing to a “multimodal native” approach within a single industry, Palona offers a useful reference model for AI developers who want to move beyond superficial implementations and tackle substantial real-world operational challenges.

Main Goal and Achieving Success in the AI Landscape
The primary goal in Palona’s narrative is to build a robust AI infrastructure that integrates multiple modalities (vision, voice, and text) into a cohesive operational system tailored to the restaurant sector. A focused vertical strategy makes this achievable because it lets a company draw on deep domain expertise and proprietary data. By prioritizing systems that can process and analyze diverse operational signals, such as customer interactions and kitchen dynamics, AI builders can create solutions that improve both operational efficiency and customer experience.
Advantages of a Vertical Strategy in AI Development
Enhanced Operational Efficiency: With a vertical focus, Palona’s systems, Vision and Workflow, automate and optimize restaurant operations, saving time and reducing errors.
Contextual Intelligence: Multimodal data processing helps the AI understand context better, enabling timely interventions that improve service quality.
Adaptability to Industry-Specific Challenges: The customized approach supports solutions for the operational challenges unique to restaurants, from managing order fulfillment to maintaining cleanliness.
Access to Proprietary Data: A focused vertical strategy opens avenues for acquiring domain-specific training data, which is crucial for developing high-performing AI models.
Resilience Against Vendor Dependency: An orchestration layer that allows AI models to be swapped dynamically reduces the risk of relying on a single vendor and improves operational stability.

Future Implications of AI Developments in Specialized Domains
AI development in specialized domains such as restaurant operations is moving towards more integrated, intelligent systems capable of real-time decision-making. As AI capabilities expand, more industries are likely to adopt similar specialized “operating systems” tailored to their needs. This will help enterprises respond to operational challenges swiftly and accurately, improving both customer satisfaction and operational efficiency. Moreover, as AI systems become more adept at handling complex, real-world scenarios, human operators will be able to concentrate on higher-level tasks while relying on AI for routine operational management.
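
The idea of an orchestration layer that swaps models dynamically can be illustrated with a short sketch. This is a minimal, hypothetical example and not Palona's actual implementation: the `Orchestrator` class, its methods, and the two stub backends are invented for illustration, and a "backend" is assumed to be any callable that takes a prompt and returns text.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

# Assumption: a backend is any callable mapping a prompt to a text response.
ModelBackend = Callable[[str], str]


@dataclass
class Orchestrator:
    """Hypothetical orchestration layer that routes prompts to swappable backends."""

    backends: Dict[str, ModelBackend] = field(default_factory=dict)
    priority: List[str] = field(default_factory=list)

    def register(self, name: str, backend: ModelBackend) -> None:
        """Add or replace a backend; new names go to the end of the priority list."""
        self.backends[name] = backend
        if name not in self.priority:
            self.priority.append(name)

    def set_priority(self, names: List[str]) -> None:
        """Reorder backends at runtime without touching calling code."""
        unknown = [n for n in names if n not in self.backends]
        if unknown:
            raise KeyError(f"unregistered backends: {unknown}")
        self.priority = list(names)

    def complete(self, prompt: str) -> Tuple[str, str]:
        """Try backends in priority order; return (backend_name, response)."""
        errors = []
        for name in self.priority:
            try:
                return name, self.backends[name](prompt)
            except Exception as exc:  # fall through to the next vendor
                errors.append(f"{name}: {exc}")
        raise RuntimeError("all backends failed: " + "; ".join(errors))


# Stub backends standing in for real vendor clients (purely illustrative).
def flaky_backend(prompt: str) -> str:
    raise TimeoutError("vendor unavailable")


def echo_backend(prompt: str) -> str:
    return f"[echo] {prompt}"


if __name__ == "__main__":
    orchestrator = Orchestrator()
    orchestrator.register("vendor_a", flaky_backend)
    orchestrator.register("vendor_b", echo_backend)
    print(orchestrator.complete("table 4 is waiting"))
```

Callers depend only on `complete`, so replacing or reordering vendors is a configuration change rather than a code change. A production system would likely add timeouts, per-modality routing, and logging, none of which are shown here.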