Contextualizing the Use of Legal AI in Modern Law Practice
The adoption of artificial intelligence (AI) in the legal sector has gained significant traction, prompting law firms and in-house legal teams to reevaluate their operating models. The motivations behind this trend range from improving efficiency to improving client service. Legal professionals who want to use AI effectively need a clear understanding of these motivations. This analysis is organized around the primary motivations for adopting legal AI, with attention to their impact on return on investment (ROI) and operational effectiveness.
Main Goals for Implementing Legal AI
The primary objective for law firms using legal AI is to improve operational efficiency while maintaining or improving the quality of client service. Firms pursue this goal by integrating AI tools into specific parts of their legal workflows, streamlining those processes and freeing lawyers for higher-value tasks. Legal professionals should therefore identify the areas of their practice that stand to benefit from AI and make sure each deployment supports their strategic objectives.
Advantages of Using Legal AI
Reduction of Unbillable Work: Legal AI tools can automate repetitive, low-value tasks, letting lawyers spend more of their time on billable work. This shift improves efficiency and can raise profitability by reducing time spent on non-revenue-generating work.
Marketing and Performative Benefits: Introducing AI can also serve as a marketing tool that signals a firm’s commitment to innovation. The direct financial ROI may be limited, but a stronger brand image can attract potential clients and reinforce existing relationships.
Capacity Expansion: Firms facing high demand can use AI to increase their output without additional hires. This lets them manage workloads more effectively and deliver work on time while controlling operating costs.
Enhanced Efficiency for In-House Teams: In-house legal departments can use AI to automate routine tasks and improve overall productivity. Processing large volumes of information quickly lets in-house lawyers devote more time to strategic initiatives that benefit their organizations.
Workflow Redesign: Integrating AI strategically into legal workflows can yield substantial efficiency gains. By rethinking traditional processes and placing AI at their core, legal professionals can significantly improve service delivery and client satisfaction.
The effectiveness of these advantages will vary with the specific context of each law firm or in-house team. Firms that do not align their AI strategies with clear operational goals may also struggle to demonstrate tangible ROI.
Future Implications of AI in the Legal Sector
As AI technology continues to evolve, its implications for the legal industry are profound. More advanced AI systems are likely to reshape legal practice and require firms to adapt continuously. Future developments may automate even more complex legal processes, reducing reliance on traditional legal expertise. Roles within law firms and in-house teams will need to be redefined, and legal professionals will need new skills to work effectively alongside AI systems.
AI could also widen access to legal services and reshape market dynamics. As AI systems become more sophisticated, they may provide reliable legal output at lower cost, making legal assistance more accessible to individuals and small businesses. This shift could challenge traditional law firm revenue models and push firms to innovate in their service offerings.
Conclusion
The integration of AI in the legal sector presents both opportunities and challenges. By focusing on defined goals and the advantages outlined above, legal professionals can navigate the complexities of this technological change. Ultimately, successful AI implementation will depend on aligning technology with strategic objectives, so that AI enhances, rather than replaces, the critical role of human expertise in legal practice.
Disclaimer
The content on this site is generated using AI technology that analyzes publicly available blog posts to extract and present key takeaways. We do not own, endorse, or claim intellectual property rights to the original blog content. Full credit is given to original authors and sources where applicable. Our summaries are intended solely for informational and educational purposes, offering AI-generated insights in a condensed format. They are not meant to substitute or replicate the full context of the original material. If you are a content owner and wish to request changes or removal, please contact us directly. Source link : Click Here

Introduction
The pervasive question about the state of artificial intelligence (AI) is whether we are experiencing an “AI bubble.” That question is fundamentally flawed. The more pertinent question is which specific AI bubbles exist and what the timelines are for their potential collapse. The debate over whether AI is a revolutionary technology or an economic hazard has intensified, and industry leaders have acknowledged distinct financial bubbles within the sector. Recognizing the multifaceted nature of the AI ecosystem is crucial, because its segments have different economic dynamics, risks, and timelines for disruption.
The Multi-Layered AI Ecosystem
The AI landscape is not a single entity but a composite of three distinct layers, each with its own economics and risk profile. Understanding these layers is essential for stakeholders, particularly those who develop and apply generative AI (GenAI) models. The distinctions matter beyond market analysis: they shape strategic decisions for GenAI scientists and developers working in this fast-moving field.
Main Goal and Achieving It
The primary objective of the original post is to explain the structure of the AI landscape and to show that not all segments are equally vulnerable to market fluctuations. It does this by separating the AI ecosystem into three layers: wrapper companies, foundation models, and infrastructure providers. Recognizing the different timelines and economics of these layers lets stakeholders make informed decisions, positioning themselves to capitalize on opportunities while mitigating the risks specific to each layer.
Advantages of Understanding AI Layers
Informed Decision-Making: By identifying which layer of the AI ecosystem they operate in, GenAI scientists can tailor their strategies and allocate resources and investment accordingly.
Anticipation of Market Trends: Understanding the timeline of each layer helps scientists and developers anticipate market shifts and adjust their strategies proactively.
Enhanced Innovation: Awareness of the competitive dynamics within each layer can drive innovation as stakeholders seek to differentiate their offerings in a crowded market.
Strategic Partnerships: Recognizing how the layers interact may reveal opportunities for collaboration among companies in different segments, creating synergies that add value.
Risk Mitigation: Understanding the vulnerability of wrapper companies relative to the stability of infrastructure providers helps GenAI scientists avoid potential pitfalls and make their projects more resilient to market fluctuations.
Limitations and Caveats
The layered view has several limitations. The rapid pace of technological change may produce disruptions that do not fit existing categories. The layers are also interconnected, which can blur the distinctions between them and complicate strategic decisions. Finally, although the infrastructure layer may appear stable, it is not immune to market pressure and could face overbuilding and underutilization in the short term.
Future Implications for Generative AI
The trajectory of AI development will have profound implications for generative AI models and applications. As the industry matures, the differences between the layers will likely become more pronounced, shaping competitive dynamics and investment flows. GenAI scientists should watch these trends closely. Foundation models may become increasingly commoditized, forcing developers to keep innovating to stay competitive. Consolidation among foundation model providers could also leave fewer dominant players, further shaping which technologies and resources are available. Meanwhile, as AI infrastructure continues to expand and evolve, it will serve as the backbone for a wide range of future applications, so GenAI scientists will need to keep adapting their strategies to these developments.
Conclusion
In summary, asking whether we are in an AI bubble oversimplifies the issue. The AI ecosystem has distinct layers, each with its own economics and timelines. With a clear view of these distinctions, GenAI scientists can navigate the industry more effectively and position themselves for success as the field evolves.

Context
The legal profession is undergoing a seismic shift driven by advances in artificial intelligence (AI) and automation. As organizations look for ways to improve the efficiency and accuracy of legal research, AI has prompted a surge of interest and investment in LegalTech. The original blog post accompanies the launch of The Geek in Review Substack page, which publishes insights and stories about the intersection of AI and the legal industry. Understanding how these technologies have evolved, particularly in legal research tools, helps explain why they are finally delivering substantial improvements after a period of stagnation.
Main Goal of the Original Post
The primary objective of the original post is to explain how legal AI has developed, focusing on how innovations in foundation models are reshaping legal research tools. By moving from simple models to more sophisticated systems, the legal industry can use AI more effectively in legal research. The post stresses the importance of understanding the underlying mechanisms of these technologies and of moving past the early assumption that better AI models automatically produce better legal research outcomes.
Advantages of Advanced Legal AI Systems
The evolution of legal AI offers legal professionals several advantages:
1. Enhanced Accuracy in Legal Research
Advanced AI systems, particularly those using Agentic Retrieval-Augmented Generation (RAG), can understand context and retrieve legal information more accurately. They use knowledge graphs to map relationships and hierarchies within legal texts, going beyond simple keyword matching. This can reduce errors such as “hallucinations,” in which AI-generated text misrepresents legal facts.
2. Improved Efficiency
Integrating AI into legal research speeds up information retrieval. With vector databases and knowledge structures, lawyers can find relevant information quickly, saving time for higher-level analysis. This matters most in high-stakes settings, where timely access to accurate information can influence case outcomes.
3. Facilitated Decision-Making
AI systems that can reason through relationships and hierarchies help lawyers understand complex legal scenarios, such as how different precedents interact, and so support better-informed decisions.
4. Adaptability to Complex Legal Queries
Traditional legal research methods struggle most with complex queries. Advanced AI systems, particularly those using Agentic RAG, handle these intricacies better. They can distinguish between types of legal material, such as dissenting opinions and majority holdings, which gives a more nuanced understanding of the law.
Caveats and Limitations
These advantages come with caveats. Advanced AI systems require continuous oversight to ensure accuracy and adherence to legal standards. They must also be integrated thoughtfully, because over-reliance on AI could undermine critical legal reasoning and strategy.
Future Implications
As AI technologies continue to evolve, their implications for the legal industry will be profound. Future systems may understand and process legal nuance at a level comparable to human expertise. As these technologies become more deeply integrated into legal workflows, legal professionals will need to adapt their skills, balancing the advantages of AI with the irreplaceable value of human judgment and ethical considerations. AI has significant potential to make legal services more efficient, equitable, and informed.
In conclusion, understanding the trajectory of AI in the legal sector is essential for legal professionals who want to use these innovations effectively. The evolution from basic retrieval systems to advanced, context-aware decision-making tools marks a critical juncture for legal research, promising greater accuracy, efficiency, and adaptability.
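The retrieval approach described in this summary combines two ideas. Vector search finds semantically similar passages. A knowledge graph records relationships between authorities and separates majority holdings from dissents. Below is a minimal Python sketch of that combination, assuming toy three-dimensional embeddings and a hand-built graph. The `Passage` schema, the `overruled_by` relation, and the `retrieve` function are hypothetical illustrations, not the design of any particular legal research product. A real system would use a learned embedding model, a vector database, and a maintained citator, and an agentic system would decide dynamically which retrieval steps to run.

```python
import math
from dataclasses import dataclass


@dataclass
class Passage:
    """A retrievable chunk of a judicial opinion (hypothetical schema)."""
    pid: str
    case: str
    opinion_type: str        # "majority" or "dissent"
    text: str
    embedding: list[float]   # toy 3-d vector; real systems use a learned embedding model


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


# Toy knowledge graph: case -> list of (relation, other case). Relation names are hypothetical.
GRAPH = {
    "Case A": [("overruled_by", "Case C")],
    "Case B": [("cites", "Case A")],
    "Case C": [],
}


def is_good_law(case, graph):
    """Treat a case as good law unless an edge records that it was overruled."""
    return all(rel != "overruled_by" for rel, _ in graph.get(case, []))


def retrieve(query_vec, passages, graph, k=3, min_score=0.5):
    """Vector search first, then use the graph and opinion type to filter candidates."""
    scored = sorted(((cosine(query_vec, p.embedding), p) for p in passages),
                    key=lambda sp: sp[0], reverse=True)
    return [p for score, p in scored
            if score >= min_score                # semantic relevance
            and p.opinion_type == "majority"     # skip dissents
            and is_good_law(p.case, graph)][:k]  # skip overruled authority


PASSAGES = [
    Passage("p1", "Case A", "majority", "Holding on the duty of care ...", [0.9, 0.1, 0.0]),
    Passage("p2", "Case B", "dissent", "Dissent urging a broader duty ...", [0.85, 0.2, 0.0]),
    Passage("p3", "Case C", "majority", "Narrowing the duty of care ...", [0.7, 0.3, 0.1]),
    Passage("p4", "Case B", "majority", "Unrelated procedural holding ...", [0.0, 0.2, 0.9]),
]

hits = retrieve([1.0, 0.1, 0.0], PASSAGES, GRAPH)
print([h.pid for h in hits])
```

In this toy run, the most similar passage (p1) is dropped because the graph marks Case A as overruled. The dissent (p2) is dropped by the opinion-type filter, so only p3 is returned. Similarity search alone would have ranked both excluded passages first.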

Context
In the rapidly evolving AI landscape, Gradio has become a widely used open-source Python package for building AI-powered web applications. Its support for the Model Context Protocol (MCP) provides a framework for hosting numerous MCP servers on platforms such as Hugging Face Spaces. The latest version, 5.38.0, introduces enhancements aimed at improving the experience and efficiency of developers and end users alike. These advances are particularly relevant to generative AI (GenAI) scientists, who rely on smooth integration and reliable functionality in their research and application development.
Main Goals and Achievements
The recent updates to Gradio’s MCP servers aim to improve usability, streamline workflows, and reduce the manual overhead typically involved in deploying AI applications. Several new features support this goal by making interactions between users and AI systems more efficient. For instance, the new “File Upload” MCP server allows direct file uploads, eliminating the need for public URLs and simplifying data handling. This matters for GenAI scientists, who need to iterate and test quickly.
Advantages of the New Features
Seamless Local File Support: Uploading files directly to Gradio applications removes a source of friction in workflows. Because public file URLs are no longer required, researchers can focus on analysis rather than file management.
Real-time Progress Notifications: Progress streaming lets developers keep users informed about ongoing processes, improving engagement and satisfaction. This real-time feedback is especially useful in applications where task completion times vary considerably.
Automated Integration of OpenAPI Specifications: A new capability turns an OpenAPI specification into an MCP-compatible application with a single line of code, simplifying the integration of existing APIs. Automating this step saves time and reduces the potential for errors, which is particularly valuable in high-stakes GenAI work.
Enhanced Authentication Mechanisms: Handling authentication headers through gr.Header communicates the required credentials more clearly. This transparency supports security and user trust, particularly when sensitive data is involved.
Customizable Tool Descriptions: Developers can now modify tool descriptions and supply text specific to each tool, which improves clarity and makes the tools easier to understand and use.
Future Implications
The improvements to Gradio’s MCP servers reflect a broader industry trend toward automation and user-centric design. As AI matures, user-friendly features will be essential for adoption and innovation. For GenAI scientists, these developments will likely make it easier to deploy more complex models in their research. As AI systems become more sophisticated, demand for real-time interactivity and responsiveness will drive further innovation in tools like Gradio and could make it an indispensable asset in the GenAI landscape.
Conclusion
The enhancements to Gradio’s MCP servers make AI application development more efficient and effective. By streamlining workflows, improving the user experience, and easing integration with existing systems, these updates position Gradio as a leader among tools for AI-powered web applications. As generative AI continues to evolve, tools like Gradio will play a critical role in shaping the future of AI research and applications.
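The summary says Gradio 5.38.0 can turn an OpenAPI specification into an MCP-compatible application with one line of code and lets developers customize tool descriptions. The sketch below illustrates only the underlying idea. It walks the spec's `paths` and emits one MCP-style tool per operation, with the `name`, `description`, and `inputSchema` fields that MCP tool listings use. A developer-supplied description overrides the spec's summary. The function `openapi_to_tools`, its `description_overrides` parameter, and the pet-store spec are hypothetical. This is not Gradio's implementation or API, and it ignores request bodies, `$ref` resolution, and authentication.

```python
import re

# Operation keys allowed inside an OpenAPI Path Item object.
HTTP_METHODS = {"get", "put", "post", "delete", "patch", "head", "options"}


def openapi_to_tools(spec, description_overrides=None):
    """Map each OpenAPI operation to an MCP-style tool: name, description, inputSchema."""
    overrides = description_overrides or {}
    tools = []
    for path, path_item in spec.get("paths", {}).items():
        for method, op in path_item.items():
            if method.lower() not in HTTP_METHODS:
                continue  # skip path-level keys such as "parameters" or "summary"
            # Prefer operationId; otherwise derive a name like "get_pets_petId".
            slug = re.sub(r"[^0-9A-Za-z]+", "_", path).strip("_")
            name = op.get("operationId") or f"{method.lower()}_{slug}"
            properties, required = {}, []
            for param in op.get("parameters", []):
                properties[param["name"]] = {
                    "type": param.get("schema", {}).get("type", "string"),
                    "description": param.get("description", ""),
                }
                if param.get("required"):
                    required.append(param["name"])
            default_desc = op.get("summary") or op.get("description", "")
            tools.append({
                "name": name,
                # A developer-supplied description takes precedence over the spec's text.
                "description": overrides.get(name, default_desc),
                "inputSchema": {"type": "object", "properties": properties, "required": required},
            })
    return tools


SPEC = {
    "openapi": "3.0.0",
    "paths": {
        "/pets": {
            "get": {
                "operationId": "listPets",
                "summary": "List all pets",
                "parameters": [{"name": "limit", "in": "query", "required": False,
                                "schema": {"type": "integer"}, "description": "Max items"}],
            },
        },
        "/pets/{petId}": {
            "get": {
                "summary": "Info for a specific pet",
                "parameters": [{"name": "petId", "in": "path", "required": True,
                                "schema": {"type": "string"}}],
            },
        },
    },
}

tools = openapi_to_tools(SPEC, {"listPets": "Return up to `limit` pets from the store."})
for t in tools:
    print(t["name"], "-", t["description"])
```

The override shows the idea behind customizable tool descriptions. The `listPets` tool takes its text from the developer, while the second tool falls back to the spec's `summary`.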

Contextual Background
The ongoing dispute between Alexi Technologies and Fastcase, recently acquired by Clio, is a critical case study in the evolving LegalTech and artificial intelligence (AI) landscape. Alexi has formally responded to Fastcase’s lawsuit with counterclaims alleging anticompetitive practices. The case highlights concerns about market dynamics in the AI legal research sector, particularly as consolidation among major players could raise barriers to competition. Its implications extend beyond the parties to the legal professionals who rely on these technologies for legal research and case management.
Main Goals and Their Achievement
The primary goal of Alexi’s counterclaims is to contest Fastcase’s breach-of-contract allegations, which Alexi argues were fabricated to undermine competition. By demonstrating anticompetitive conduct by Fastcase and Clio, Alexi seeks both to defend its market position and to promote fairer competition in the legal technology sector. Achieving this requires a robust legal strategy, including concrete evidence and expert testimony to substantiate the claims of anticompetitive behavior.
Advantages of a Competitive LegalTech Market
Innovation and Development: A competitive marketplace fosters innovation, driving advances in AI and LegalTech tools that make legal research more efficient and accurate.
Diverse Options: Legal practitioners gain access to a broader range of tools and services and can choose solutions suited to their specific needs and preferences.
Cost-Effectiveness: Competition typically lowers prices, making advanced legal technologies more accessible to smaller firms and solo practitioners and broadening access to cutting-edge tools.
Quality Improvement: As companies compete to maintain an edge, the overall quality of LegalTech products and services is likely to improve, directly benefiting end users.
There are caveats. Consolidation in the LegalTech sector, for instance, may reduce the diversity of offerings if dominant players favor their own products over innovative solutions from smaller companies.
Future Implications of AI Developments
This lawsuit and the broader developments in AI could substantially reshape the LegalTech landscape. As AI technologies advance, their integration into legal research and practice will likely expand what legal professionals can do, enabling more sophisticated data analysis and predictive modeling. If anticompetitive practices persist, however, smaller firms may struggle to compete, stifling innovation and limiting the benefits of AI. The resolution of this lawsuit could set a precedent for how regulators approach mergers and acquisitions in the LegalTech industry, shaping the future interplay between competition and technological advancement.

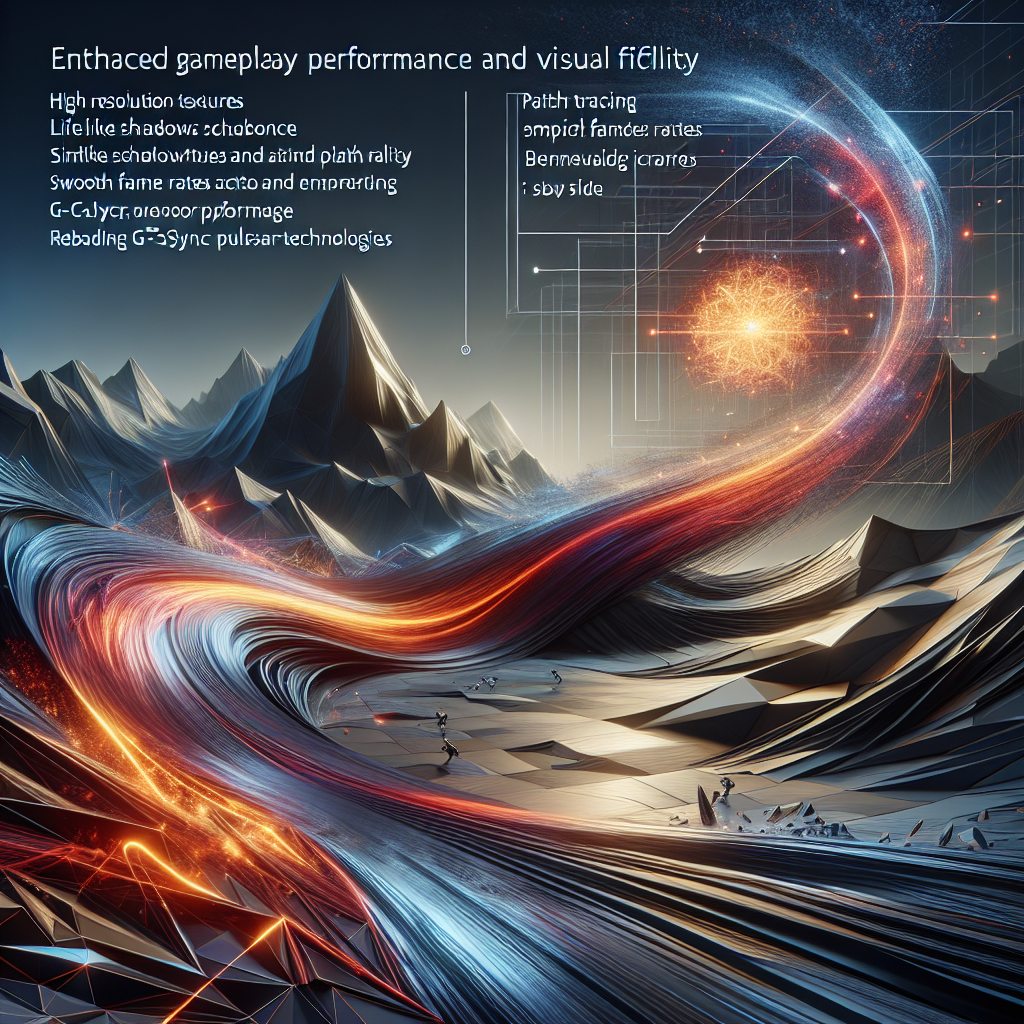

Context and Overview
At the CES trade show, NVIDIA unveiled its latest gaming technology. DLSS 4.5 introduces Dynamic Multi Frame Generation and a new 6X Multi Frame Generation mode, significantly improving gaming performance and visuals. With over 250 games and applications now supporting DLSS 4 technology, NVIDIA is setting new standards for immersive gaming. The integration of AI technologies such as NVIDIA ACE and RTX Remix Logic also shows the growing intersection between gaming and artificial intelligence, with profound implications for the Generative AI Models & Applications industry.
Main Goals and Achievements
The primary goal described in the original post is to improve gaming performance and visual fidelity through advanced technologies, particularly DLSS 4.5. These gains rely on the capabilities of GeForce RTX 50 Series GPUs, which deliver higher frame rates and better image quality. Dynamic Multi Frame Generation keeps gameplay fluid even in graphically intensive scenarios. Beyond improving the gaming experience, this progress demonstrates the potential of AI-driven technologies to transform interactive entertainment.
Advantages of NVIDIA’s Innovations
Enhanced Frame Rates: With DLSS 4.5’s Dynamic Multi Frame Generation and the 6X mode, up to five additional frames can be generated for each traditionally rendered frame. This significantly boosts performance and smooths gameplay, particularly in demanding titles.
Widespread Compatibility: With over 250 games and applications now supporting DLSS 4 technology, gamers have an extensive and varied library of titles to choose from.
AI Integration: NVIDIA ACE enables intelligent NPCs that understand context and respond adaptively, making gameplay more immersive.
Dynamic Graphics Modding: RTX Remix Logic lets modders add real-time graphics effects to classic games without direct access to the game’s source code, making those games more appealing to new audiences.
G-SYNC Pulsar Monitors: G-SYNC Pulsar monitors offer over 1,000Hz effective motion clarity and a tear-free visual experience, improving gameplay precision.
There are caveats. Taking full advantage of these innovations requires advanced hardware, and modding technologies may have a steep learning curve.
Future Implications
These advances in AI and gaming technology herald a transformative era for the Generative AI Models & Applications sector. As AI capabilities evolve, the way games are designed and experienced is likely to change significantly. Future AI technologies may produce more sophisticated NPC behavior and dynamic game environments that react intelligently to player actions. AI could also enable personalized gaming experiences that tailor gameplay to individual players’ preferences and behaviors. Such advances would increase user engagement and could open new applications of AI in other industries, such as education, training simulations, and interactive storytelling.
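The frame-rate arithmetic behind the 6X mode is straightforward. If each traditionally rendered frame is followed by up to five generated frames, the displayed frame rate is at most six times the rendered rate. The sketch below works through that arithmetic. The `pick_multiplier` policy chooses the smallest multiplier that reaches the display's refresh rate. It is a hypothetical stand-in for Dynamic Multi Frame Generation, not NVIDIA's documented algorithm. The model is idealized and ignores frame-generation overhead, frame pacing, and latency.

```python
def displayed_fps(rendered_fps, multiplier):
    """Idealized output rate: each rendered frame is followed by (multiplier - 1) generated frames."""
    if not 1 <= multiplier <= 6:
        raise ValueError("multiplier must be 1 (off) through 6 (6X mode)")
    return rendered_fps * multiplier


def pick_multiplier(rendered_fps, refresh_hz, max_multiplier=6):
    """Hypothetical dynamic policy: smallest multiplier whose output reaches the refresh rate."""
    for m in range(1, max_multiplier + 1):
        if displayed_fps(rendered_fps, m) >= refresh_hz:
            return m
    return max_multiplier  # the refresh rate is out of reach; use the highest mode


for fps, hz in [(60, 240), (40, 240), (30, 240), (144, 120)]:
    m = pick_multiplier(fps, hz)
    print(f"{fps} fps rendered, {hz} Hz display: {m}X mode, "
          f"{m - 1} generated per rendered frame, about {displayed_fps(fps, m)} fps shown")
```

At 60 rendered fps on a 240 Hz display, this policy settles on 4X, with three generated frames per rendered frame. At 30 fps even 6X reaches only 180 fps, so the policy stays at the cap.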

Context and Overview
The case of G.G. v. Salesforce.com, Inc. highlights critical concerns about tertiary liability under the Fight Online Sex Trafficking Act (FOSTA). Salesforce, a prominent vendor to Backpage, became entangled in litigation because of its indirect role in supporting an online platform associated with sex trafficking. The case shows how complex liability becomes in digital marketplaces, particularly for service providers several degrees removed from the direct victims of illicit activity. Under FOSTA, legal expectations about liability for online platforms have been warped, as courts interpret vague statutory language in light of egregious facts. Salesforce’s predicament illustrates the broader implications for vendors and service providers in the rapidly evolving landscape of LegalTech and artificial intelligence (AI). As these technologies spread through the legal sector, understanding liability risk is paramount for legal professionals and technology companies alike.
Main Goals and Achievements
The principal goal of the original post is to examine how tertiary liability claims, particularly under FOSTA, challenge conventional legal frameworks and impose unexpected burdens on service providers. It pursues this through a critical analysis of precedents such as the Salesforce case, which push vendors to assess rigorously whether their clients’ operations are lawful. Legal professionals must adapt to these evolving standards and adopt comprehensive compliance strategies to mitigate liability risk.
Advantages of Understanding Tertiary Liability
Enhanced Risk Management: Legal professionals who understand tertiary liability can implement more robust risk management strategies. Awareness of potential legal exposure lets firms address compliance issues before they escalate into litigation.
Informed Client Advisement: Understanding the nuances of liability claims lets legal advisers give informed advice, particularly to clients in industries exposed to FOSTA. This proactive approach can protect clients from unforeseen legal challenges.
Strengthened Vendor Relationships: Vendors who understand the liability landscape and demonstrate awareness of their legal obligations are more likely to build trust and collaboration with their clients.
Limitations and Caveats
Addressing tertiary liability through legal frameworks has inherent limitations. The ambiguous statutory language in FOSTA can lead to inconsistent judicial interpretations, leaving vendors uncertain about how to comply. The evolving nature of digital marketplaces also requires continual updates to risk management practices, which may strain the resources of smaller firms.
Future Implications of AI Developments
The spread of AI technologies in the legal sector is likely to change how liability is assessed and managed. As AI systems become better at identifying and mitigating risk, they could give legal professionals tools to automate compliance checks and monitor client activity. This could improve their ability to anticipate legal exposure and foster a more proactive legal culture. AI-driven analytics could also support more nuanced interpretations of legal liability, potentially influencing future case law and statutory revisions. As AI continues to evolve, legal professionals must remain vigilant and adapt their practices to both technological advances and shifting legal standards.
Disclaimer The content on this site is generated using AI technology that analyzes publicly available blog posts to extract and present key takeaways. We do not own, endorse, or claim intellectual property rights to the original blog content. Full credit is given to original authors and sources where applicable. Our summaries are intended solely for informational and educational purposes, offering AI-generated insights in a condensed format. They are not meant to substitute or replicate the full context of the original material. If you are a content owner and wish to request changes or removal, please contact us directly. Source link : Click Here

Context of Legal AI Advancements In the rapidly evolving landscape of Legal Technology (LegalTech), artificial intelligence (AI) presents transformative opportunities for in-house legal teams. A notable recent development is Sandstone’s $10 million seed round, led by Sequoia Capital and backed by more than 20 general counsels (GCs) and legal sector experts. Sandstone, a legal AI platform, aims to reshape legal workflows by converting institutional knowledge into dynamic, agentic processes that improve operational efficiency. Main Goals of Sandstone At the core of Sandstone’s mission is streamlining the management of legal workflows through an AI system that continuously learns from user interactions. This system aims to: Transform dispersed institutional knowledge into actionable workflows. Enable rapid deployment of legal agents that automate intake, triage, and other workflows through platforms such as Slack and Salesforce. Establish ‘context-in-motion’, linking workflows to the underlying business context so that expertise is applied consistently across teams. Advantages of Implementing AI in Legal Workflows An AI-driven platform like Sandstone offers several advantages that can significantly improve the productivity and effectiveness of legal teams: Enhanced Knowledge Management: By consolidating institutional knowledge, Sandstone lets teams find crucial information quickly, reducing the time spent searching for answers and documentation. Increased Operational Efficiency: The ability to deploy legal agents within ten minutes lets teams automate repetitive tasks, freeing legal professionals to focus on more strategic work.
Improved Collaboration: Integrating with existing tools such as email and project management software facilitates seamless communication and collaboration within teams, minimizing the ‘context-switching’ that often hinders productivity. Competitive Advantage: By ensuring that expertise is shared and preserved within the team, organizations can maintain a competitive edge in a rapidly changing legal environment. Caveats and Limitations While the benefits of adopting AI systems in legal operations are substantial, there are several caveats to consider: Initial Investment and Implementation Challenges: The integration of AI solutions may require substantial upfront investment and careful planning to ensure effective implementation. Dependence on Quality Data: The effectiveness of AI systems hinges on the quality of data provided; inadequate data may lead to suboptimal outcomes. Change Management: Organizations may face resistance to adopting new technologies, necessitating robust change management strategies to facilitate user buy-in. Future Implications of AI in Legal Services The landscape of LegalTech is poised for significant transformation. As AI technologies mature, the focus will shift from basic automation to advanced, context-aware systems that can understand and execute complex legal workflows. In the coming years, it is anticipated that: Legal AI solutions will evolve to provide a more tailored approach, adapting to the unique contexts and needs of individual legal departments. The market will likely move away from fragmented point solutions, favoring comprehensive platforms that serve as central hubs for all legal operations. The role of legal professionals will evolve, with a greater emphasis on strategic thinking and problem-solving, as AI takes over routine administrative tasks. 
In conclusion, the integration of AI into legal workflows, as exemplified by Sandstone’s recent funding and innovative approach, signifies a pivotal shift in how legal teams operate. By embracing these advancements, legal professionals can enhance their efficiency, reduce administrative burdens, and ultimately provide greater value to their organizations.

Contextual Overview The NeurIPS conference consistently showcases research that shapes the trajectory of artificial intelligence (AI) and machine learning (ML). The 2025 conference featured papers that question established beliefs in the field, particularly about model scaling, the efficacy of reinforcement learning (RL), and the architecture of generative models. The prevailing notion that larger models automatically reason better is increasingly being challenged; attention is shifting instead to architectural design, training dynamics, and evaluation strategy as core determinants of AI performance. One example is the role representation depth plays in scaling reinforcement learning effectively. Main Goal and Its Achievement The central objective of the discussions emerging from NeurIPS 2025 is to reframe how AI scalability and effectiveness are understood. Specifically, they suggest that the limitations of reinforcement learning are not merely a function of data volume but depend significantly on the depth and design of the model architecture. Acting on this requires practitioners to rethink how they approach model training and evaluation. By adopting deeper architectures and new training approaches, practitioners can extend the capabilities of generative AI systems and build more robust, adaptable AI applications. Advantages of the New Insights 1. **Enhanced Model Performance**: Deeper architectures can significantly improve model performance across a range of tasks, particularly in reinforcement learning, where conventional wisdom held that added depth offered limited benefit. 2.
**Improved Diversity in Outputs**: Metrics that reward the diversity of outputs, rather than correctness alone, can be used to train models that generate a wider array of responses, enhancing creativity and variety in applications. 3. **Architectural Flexibility**: Simple architectural adjustments, such as gated attention mechanisms, can yield significant performance gains without complex redesigns, making improvements more accessible. 4. **Predictable Generalization**: A better understanding of training dynamics can make generalization in overparameterized models, such as diffusion models, more predictable, reducing the risk of overfitting and improving reliability. 5. **Refined Training Pipelines**: Reevaluating what reinforcement learning actually contributes allows different training methodologies to be integrated more effectively, promoting a holistic approach to improving model capabilities. *Limitations*: While these advantages are promising, challenges remain, including the need for rigorous evaluation metrics and the potential for biased model outputs. New strategies should be accompanied by a critical assessment of their implications for model fairness and representativeness. Future Implications As the focus shifts from simply increasing model size to optimizing system design, AI practitioners will need a more nuanced understanding of which architectural elements contribute to model success. This evolution is likely to produce more sophisticated applications of generative AI across industries, from creative sectors to complex decision-making systems. In particular, the emphasis on representation depth and architectural tuning may enable AI models that are not only more capable but also more closely aligned with human-like reasoning.
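Item 3 mentions gated attention only in passing. As a rough illustration of why such a change counts as "simple", the sketch below shows one common form of the idea: a single attention head whose output is multiplied elementwise by a sigmoid gate computed from the same input. This is a minimal pure-Python sketch under stated assumptions, not the method of any specific NeurIPS paper; the function names, the single-head setup, the absence of a causal mask, and placing the gate after the attention output are all illustrative choices.

```python
import math
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Multiply an (n x k) matrix by a (k x m) matrix."""
    return [
        [sum(a[i][p] * b[p][j] for p in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def softmax(row: List[float]) -> List[float]:
    """Numerically stable softmax over one row of scores."""
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def attention(x: Matrix, w_q: Matrix, w_k: Matrix, w_v: Matrix) -> Matrix:
    """Single-head scaled dot-product attention, no masking (a simplification)."""
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    scale = math.sqrt(len(q[0]))
    scores = [
        [sum(qi * ki for qi, ki in zip(q_row, k_row)) / scale for k_row in k]
        for q_row in q
    ]
    weights = [softmax(row) for row in scores]  # each row sums to 1
    return matmul(weights, v)


def gated_attention(x: Matrix, w_q: Matrix, w_k: Matrix, w_v: Matrix, w_g: Matrix) -> Matrix:
    """Hypothetical gated variant: attention output scaled by sigmoid(x @ w_g).

    The gate depends on each token's input, so a head can damp or pass
    through each output channel per token. w_g is an assumed extra weight.
    """
    out = attention(x, w_q, w_k, w_v)
    gate_logits = matmul(x, w_g)
    return [
        [o * sigmoid(g) for o, g in zip(o_row, g_row)]
        for o_row, g_row in zip(out, gate_logits)
    ]
```

With an all-zero `w_g`, every gate equals sigmoid(0) = 0.5, so the gated output is exactly half the ungated one; strongly negative gate weights drive the output toward zero. In a trained model `w_g` would be learned alongside the other projections, which is what makes this a small, local change to the architecture.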
As the field continues to advance, the interplay between architectural design and learning dynamics will likely dictate the next wave of breakthroughs in AI, reshaping the landscape of generative models and their applications.

Introduction In the current technology landscape, Generative AI (GenAI) presents both unprecedented opportunities and considerable risks, particularly in sectors such as LegalTech. As AI becomes increasingly integrated into everyday practice, the risk of what has been termed “enshittification” emerges: a gradual degradation of service quality as companies prioritize profits over user experience. This post draws on the insights of industry thought leaders to examine the implications of GenAI for legal professionals and to propose strategies for mitigating the associated risks. Contextualizing the GenAI Siren Song The allure of GenAI is reminiscent of the mythical Sirens in Homer’s The Odyssey. Much like the Sirens’ enchanting song, modern AI systems promise valuable insights and efficiency that draw users ever closer. However, as Sam Altman has noted, integrating advertising into these AI platforms raises concerns about its effect on the user experience. The temptation to rely on these tools without critical evaluation could leave legal professionals in a precarious position, one in which they unwittingly sacrifice their autonomy and judgment. Main Goal and Its Achievement The primary goal of the original discussion is to encourage a cautious approach to adopting GenAI in legal practice. By acknowledging the risks of over-reliance on these tools, legal professionals can better navigate the complexities of implementing them. Achieving this goal requires a commitment to critical thinking, ongoing education, and a willingness to question the motives behind AI products. Legal professionals should establish a framework that weighs ethical considerations alongside technological integration.
Advantages of a Cautious Approach Enhanced Critical Thinking: Skepticism toward GenAI encourages legal professionals to maintain their analytical skills rather than become overly reliant on automated outputs. Improved Ethical Standards: By scrutinizing the biases and manipulative practices that can arise in AI systems, legal professionals can uphold the integrity of their work and protect clients’ interests. Informed Decision-Making: A cautious approach builds a thorough understanding of the tools at hand, enabling legal professionals to decide when and how to use GenAI effectively. Mitigation of Risks: By recognizing the enshittification process, legal professionals can proactively seek alternatives or use multiple platforms, reducing dependence on a single service provider. Caveats and Limitations While the advantages of a cautious approach are significant, it has limitations. AI may advance faster than legal professionals can keep up with emerging technologies. Furthermore, competitive pressure in technology may push some firms to adopt GenAI solutions hastily, leading to uneven adoption across the industry. Future Implications of AI in LegalTech As GenAI continues to evolve, its impact on the legal sector is likely to intensify. Future models may further blur the line between human judgment and algorithmic decision-making. Legal professionals must remain vigilant about the potential for reduced accountability and the ethical implications of relying on AI-generated outputs. Moreover, if advertising within AI responses becomes widespread, it could compromise the objectivity and reliability of the information provided, calling for a more robust regulatory framework to guard against such degradation.
Conclusion The integration of GenAI into LegalTech presents both significant opportunities and serious risks. By adopting a cautious approach, legal professionals can harness the benefits of these advancements while safeguarding their autonomy and upholding the standards of their practice. It is imperative to remain grounded in critical thinking and ethical considerations as the industry navigates the complexities of AI technology.