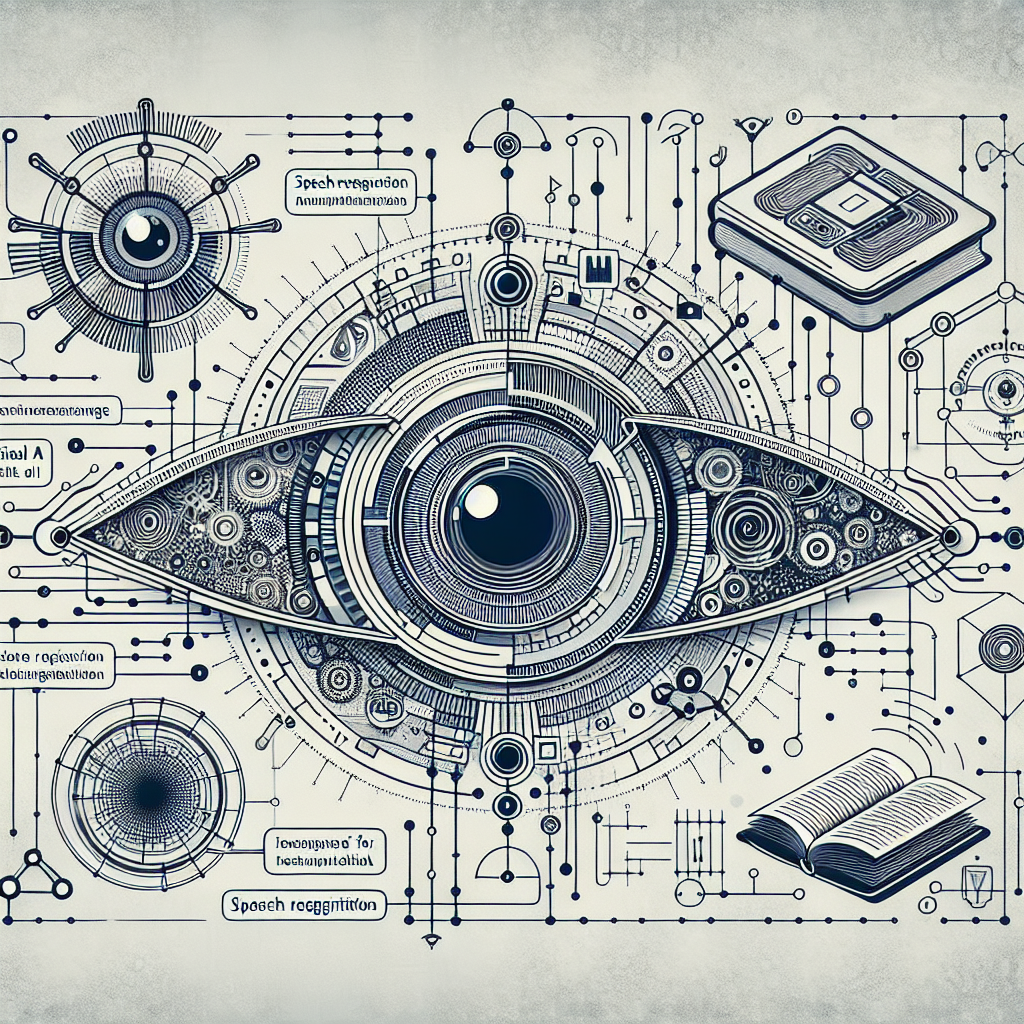

In recent years, artificial intelligence (AI) has shifted markedly toward multimodal systems. These systems can interpret and analyze several forms of data, including images, audio, and text, which lets them work with information in its native format. This is a notable advance for Natural Language Understanding (NLU) and Language Understanding (LU), fields that are essential for building systems capable of human-like interaction. The implications go beyond the technology itself: multimodal AI changes how AI systems interact with the world.

The principal objective of multimodal AI is to integrate diverse data modalities to improve the understanding and generation of human language. By combining visual, auditory, and textual inputs, these systems can interpret context and intent with more nuance, which improves communication between humans and machines. Achieving this requires algorithms that can process and combine information from different sources, leading to more accurate responses and a better user experience.
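One common way to combine inputs from several modalities is late fusion: each modality is encoded separately, and the resulting vectors are merged into one representation. The sketch below is a minimal illustration under stated assumptions, not a description of any particular system. The function names (`l2_normalize`, `fuse_embeddings`), the weights, and the toy vectors are all hypothetical; in practice the vectors would come from trained text, image, and audio encoders.

```python
from math import sqrt


def l2_normalize(vec):
    """Scale a vector to unit length so no modality dominates by magnitude."""
    norm = sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm > 0 else list(vec)


def fuse_embeddings(embeddings, weights=None):
    """Late fusion by weighted concatenation.

    embeddings: dict mapping a modality name to its feature vector.
    weights:    optional dict mapping a modality name to a scalar weight
                (assumed values; a real system would usually learn these).
    """
    if weights is None:
        weights = {name: 1.0 for name in embeddings}
    fused = []
    # Sort by name so the layout of the fused vector is deterministic.
    for name in sorted(embeddings):
        unit = l2_normalize(embeddings[name])
        fused.extend(weights[name] * x for x in unit)
    return fused


# Toy, made-up feature vectors standing in for real encoder outputs.
features = {
    "text": [3.0, 4.0],
    "image": [0.0, 2.0],
    "audio": [1.0, 0.0],
}
fused = fuse_embeddings(
    features, weights={"text": 1.0, "image": 0.5, "audio": 0.5}
)
print(len(fused), fused)
```

The fused vector can then be passed to a downstream model that predicts intent or generates a response. Concatenation is only one option; averaging, gating, or attention across modalities are alternatives with different trade-offs.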

Despite these advantages, multimodal AI has clear limitations. Building such systems is complex, which increases resource requirements for both data processing and model training. Keeping outputs accurate and reliable across modalities also remains a significant challenge that requires ongoing research and development.

The evolution of multimodal AI is likely to shape the future of Natural Language Understanding. As the technology advances, we can expect more intuitive and responsive AI systems that fit more naturally into everyday life. These developments are likely to improve accessibility, allowing people with diverse communication needs to interact more effectively with technology. The convergence of AI with emerging technologies such as augmented reality (AR) and virtual reality (VR) may also enable new modes of interaction and change how humans engage with machines.

